Preamble - Performance still is important

Sooner or later, the performance of any application you’re using becomes important. It may start off performing quite well, and then begin to slow down and grind to a halt over time. In a few cases, it may perform abysmally right from the launch.

I spent more than two decades at IBM (starting in 1970, now long retired) and a fair bit of that was taken up advising, supporting and troubleshooting IBM customers on a broad range of performance-related matters. Forty years on, performance is no less important as we approach the end of the first decade of this 21st century.

We don’t usually notice a system’s performance at all when it is good, but we certainly notice it when it is slow. Think Google search, nearly always sub-second (which now we take for granted, and only notice the extremely rare slowdown), versus some other web applications that run at tortoise-like speeds. Overall, the performance of a system could be summarized as “what the end user sees and accepts as reasonable” for whatever applications they are running.

Once upon a time computing (or “data processing”) was nearly all in the form of batch processing on centralized machines. Then along came mini-computers (smaller than corporate machines, typically in corporate divisions or departments or smaller businesses), then desktop machines (like the IBM Personal Computer, or PC), and nowadays we are right down to handheld devices (PDAs, mobile phones, netbook PCs, etc).

As a general statement, the overall observed performance is the sum of the individual performance of each in a series or chain of stages involving different hardware/software components: central processing unit (CPU), main storage (RAM), buffers of various types, channels or similar data paths, communication links, the operating system binding it all together, and finally the applications being executed.

Then there’s the speed of movement of data between the stages: to and from non-volatile storage (persistent, long-term storage) on devices such as paper tape or punched cards in the early days, magnetic media (disks, tapes, diskettes), flash memory (getting faster and cheap enough to soon become widespread for bulk storage in the gigabyte range), and who knows what in the future (quantum storage, holographic storage, carbon nanotube storage, or whatever might eventuate).

The overall performance of a transaction, meaning the time from when a user requests something to be done until the last bit of the result is served back, is the sum of the performance of each and every link or step in the device chain. It depends not just on the raw speed characteristics of each step, but also on the workload being imposed (often in a shared user environment, such as a Web server).

There are nearly always complex interactions between steps and at each stage in the overall process: queuing for service, task execution (at some relative priority and for some length of time or “time slice”, perhaps getting preempted and dropping back in the queue), recovering from errors (often badly designed and handled), and more. All in all, it’s a very complex picture.

Sometimes you encounter poor performance because of inadequately funded hardware (and perhaps software) or poor infrastructure design (low-powered servers, slow communications links, and the like).

But quite frequently it’s a matter of poor application design: bad or even erroneous coding, choosing the wrong algorithm for a sub-task, inadequate or even non-existent error handling, and much more.

Even an otherwise excellent service can be brought to its knees by a bad application, such as one with an extremely inefficient sorting algorithm, one that retrieves a data record in an extremely inefficient manner, or one that waits on an error that is never going to be recovered from. One classic example is the deadlock or so-called deadly embrace record update situation, which can bring even the fastest of systems to a dead halt in processing your transaction (and at the very least locks out one other user too, but possibly more).

IBM Lotus Notes and Domino performance considerations

Here I’d like to share my findings on one aspect of performance that I haven’t come across being covered elsewhere, at least in the way that I’m going to explain it: the analysis of Notes/Domino view size as it relates to view indexing performance.

You’ll want to know how much hard disk capacity is needed to store the view indexes (indices, if you prefer) in your Notes applications, and from this get some feel for the effect on view index maintenance processing overheads which can have a major effect on overall Domino server transaction throughput and response times.

There are many resources from IBM and other parties which give excellent advice and guidance about analyzing and managing performance for both the Lotus Notes desktop client and the Lotus Domino server. I’ve no intention of going over this broad field, having already assembled many useful reference links for you at my web site here and its mirror/backup here.

Many of these (and other forums/blogs maintained by the Notes community) discuss the design of Lotus Notes views. Some of them give excellent tips for optimizing the performance of Notes views, either by optimizing view design (many considerations) or setting the properties of the views such as index refresh/discard options.

What follows is a brief discussion of views as they relate to NotesTracker (see here or here). I gathered this information when a user of NotesTracker asked me how to predict the size of the Usage Log repository database, and to give some guidance on when it should be archived.

NotesTracker concepts

NotesTracker is a set of routines that you (once a licensed purchaser) can easily apply to the design of any of your own Notes/Domino applications. Read more about it in the NotesTracker Guide, a download link for which is on either of the web pages mentioned a few paragraphs above.

Think of NotesTracker as a software development kit (SDK). Once you have modified the design of any of your applications, NotesTracker can write out a “usage log record” for each and every user interface transaction against that database: document CRUD events (Create, Read, Update, Delete), document paste-ins, document mail-ins.

You control what NotesTracker does via a NotesTracker Profile that you place in each database (on a replica by replica basis). For example, in the case of a document update event you can specify whether or not field changes are tracked, and on top of that whether or not an e-mail alert is sent out (say, to Notes administrators or coordinators of that particular application database).

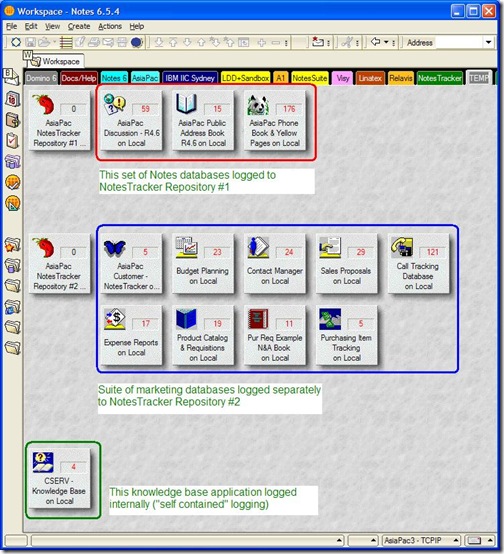

These events are logged as ordinary Notes documents, the same way for both Notes Client and Web browser interactions (no dichotomy here). For a given database replica, you can specify that the usage log repository be the database itself or an external Notes database. With this very generic logging mechanism, you have tremendous flexibility in the way that usage log repositories may be organized, as the following diagram illustrates:

You might take the simplest approach, and send all usage log documents to a single central repository. The top two groups of applications (circled in red and blue) indicate how you might instead set up a number of different repositories grouped by application category (Marketing, Finance, HR, Manufacturing, or whatever), and at the bottom (circled in green) have any database store its own usage log documents internally. Undoubtedly you would have many more Notes databases than illustrated above, but the same methodology applies.

NotesTracker uses nothing but regular Notes/Domino capabilities. Usage log records (documents) are replicated in the normal fashion between servers, giving a composite organizational usage picture covering both Notes Client and Web browser activities.

How is reporting done? Via ordinary Notes views of course, nothing special. A pre-built set of NotesTracker views is distributed with the SDK, and you can extend or modify these views any way you like, no specialist skills being necessary. Indeed, all of NotesTracker was carefully designed so that no more than a medium level of Notes developer and administrator skills is required for installation, programming and administration (including security).

No end-user training is required whatsoever (indeed, they may not even be aware that NotesTracker capabilities have been added to a database, although there may be legal or organizational policies that require you to inform them that their actions are being tracked).

The build-up of NotesTracker Usage Log documents, and view index overheads

Because NotesTracker is creating usage log documents (one document per user interaction), the Notes administrator will need to understand the ramifications: disk space consumed and server CPU workload implications.

Presumably this would be particularly important to monitor for databases where the usage log documents are being created internally (in that database itself) and could have a noticeable effect on view opening performance. It’s probably not so critical for central NotesTracker repositories (particularly if they are placed on a dedicated disk drive), because the usage log documents are being appended to what’s already there and the speed of doing so should be quite fast, though the effect (of rapidly adding many such documents) on view indexing might be considerable. But to stress again, this is “business as usual” in terms of Domino server administrative skills needed.

As a good first rough approximation, for NotesTracker the database size increases at 1.5KB to 2KB per usage log document. The growth rate needs to be monitored, and you should devise an appropriate archive-and-purge strategy if disk space is a worry. How frequently you purge log documents should primarily be determined by the length of time — typically a number of months (or even years) — for which you wish to retain usage metrics.
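To put that rule of thumb to work, here is a minimal Python sketch that projects how much space the usage log documents alone would take over a retention period. The daily event count and the retention period are made-up inputs purely for illustration, and view index space is deliberately left out of this estimate.

```python
def projected_log_growth_mb(events_per_day, retention_days, kb_per_doc):
    """Space (in MB) taken by usage log documents alone, excluding view indexes."""
    total_docs = events_per_day * retention_days
    return total_docs * kb_per_doc / 1024


# Hypothetical workload: 2,000 logged events per day, retained for 180 days.
# The 1.5 KB and 2 KB figures are the rough per-document range quoted above.
low = projected_log_growth_mb(2000, 180, 1.5)
high = projected_log_growth_mb(2000, 180, 2.0)
print(f"Usage log documents only: {low:.0f} MB to {high:.0f} MB")
```

Plug in your own activity levels and retention period to get a feel for how often the archive-and-purge cycle needs to run.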

Of course, it’s not only document contents that take up space in a database. Keep in mind that view indexes will have a major impact on database growth, rather than the relatively small amount of data stored in the log documents. To reduce Notes Client view opening overheads (and Domino server workload needed to maintain the view indexes), the number of sorted view columns has been kept reasonably low. However, you may wish to alter the view designs to decrease the number of sorted view columns even further, or to make other changes that balance view opening times against indexing overheads to your satisfaction.

As a guide, one user of NotesTracker found that some 60,000 Usage Log entries occupied close to 1 GB of disk space, equating to an average of 16 to 17 KB per usage log document. I’m not sure if they removed any of the default views from the repository, or altered any of the views’ indexing properties, both of which could have a big influence on this average. (Naturally enough, other Notes applications could and almost certainly would have quite different characteristics. Your mileage may vary, as the saying goes.)
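The per-document average is easy to check for yourself. The short Python sketch below redoes the division for that user's figures; since “close to 1 GB” is only approximate, it tries both the decimal and binary meanings of a gigabyte as assumed upper bounds.

```python
docs = 60_000  # Usage Log entries reported by that user

# "Close to 1 GB" is approximate, so both readings of 1 GB are assumptions.
decimal_gb_bytes = 10 ** 9
binary_gb_bytes = 1024 ** 3

kb_per_doc_decimal = decimal_gb_bytes / docs / 1000
kb_per_doc_binary = binary_gb_bytes / docs / 1024

print(f"Decimal GB: {kb_per_doc_decimal:.1f} KB per usage log document")
print(f"Binary GB:  {kb_per_doc_binary:.1f} KB per usage log document")
```

Either way the average is many times the 1.5 KB to 2 KB of actual document content, which is consistent with view indexes accounting for most of the space.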

Disk Space management – the NotesTracker archiving agent

In NotesTracker there is an archive agent that can be run as-required or on a scheduled basis, giving you the control you need to remove historic log records for managing repository database size. The archive agent is discussed a little further on.

Monitoring and Managing Usage Log view indexes

The NotesTracker Repository is distributed with around 35 views. Some views will only ever contain a small number of documents, even down to a single document. Most of the views are based on a selection of Usage Log documents (all of them, or a subset), and might contain tens of thousands of documents depending on the level of activity in your applications and the length of time — weeks or months — that Usage Log records have been stored before being archived.

The NotesTracker views provided are generally configured to discard their indexes after 14 days of inactivity, and it’s simple for you to alter these settings if you wish.

You should monitor the NotesTracker view index sizes over time. If there is any view that is used rarely, you should consider setting its index discard period to a smaller number of days or perhaps even consider removing the view from the Repository.

It’s interesting to note that NotesTracker has a unique method for you to make an extremely quick and simple, standardized modification to the designs of the views in a database, after which you can track individual view usage. This gives you a sound basis for knowing which views are heavily used (and should be retained) and which ones are seldom used (thereby being candidates for being removed from the database’s design). Indeed, one company purchased a NotesTracker license just to do this very thing.

- In his IBM Lotus Notes Hints, Tips, and Tricks weblog, Alan Lepofsky gives a few tips about database sizes. See: http://www.alanlepofsky.net/alepofsky/alanblog.nsf/dx/local-mail-part-2

- In this second article, Alan describes how view indexes occupy part of the space taken up by a database: http://www.alanlepofsky.net/alepofsky/alanblog.nsf/dx/size-really-does-matter

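The Domino server console command for inspecting a database, including its view sizes, takes this form:

show database database_filename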

Here’s an example for database notestracker.nsf in subfolder notestracker_v5.1:

But let’s do things a much better way: using the Domino Administrator client to look inside the database. Consider a newly-created NotesTracker Repository database, which we select like this:

The resulting panel “Manage the views of this database” (next image) shows us that a group of Usage Tracking views, circled in red, have indexes that are some three or four times larger than other Usage Tracking views (circled in green). The index size difference essentially reflects the complexity of the individual view designs, nothing else. For this exercise, it will be the views circled in red that we focus on, but this has no effect on the overall argument.

As mentioned above, this example database is quite small. It contains only about 900 Usage Log documents and its overall size is only about 14 MB.

Firstly, a new “empty” copy of the database was made, containing no Usage Log documents as a base point. Its size with empty view indexes was less than 4 MB. You will notice that the various view index sizes ranged between 1 KB and 4 KB.

Then normal database activity was carried out for a short while: creating, reading, updating, deleting documents in other databases. This generated some 6140 Usage Log documents in this NotesTracker Repository database.

Then each of the twelve commonly-used views circled in red in the following image was displayed, causing their indexes to be created. The repository database size increased from 4 MB to 74 MB, and the index sizes (focus on the twelve circled in red) looked like this:

Note that this was somewhat atypical, having a very high disk space percentage used of 99.3% — because this NotesTracker Repository is essentially a logging database, the main activity being sequential adding of Usage Log documents. It is likely that most “normal” databases would in practice have a significant percentage of “white space” (until they are compacted).

Finally, a new copy of this database was made, and its size was reduced to 9 MB (a somewhat easier way to eliminate the view indexes, compared with manually initiating a compaction).

This indicates that the 6140 documents themselves occupied about 5 MB (that is, 9 MB minus the “empty” database size of 4 MB).

We saw a little earlier that with full view indexes the database size was 74 MB, therefore the 6140 documents had view indexes (for 12 views) totaling about 65 MB (that is, 74 MB minus the 9 MB size without indexes). This all indicates that each Usage Log document adds, as a simple approximation, about 1 KB per view!
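For anyone who wants to rerun the arithmetic with their own measurements, here is a small Python sketch of the same calculation. It uses only the sizes quoted in this exercise (4 MB empty, 9 MB with documents but no indexes, 74 MB with the twelve indexes built) and assumes, as a simplification, that all the extra space is attributable to those twelve view indexes.

```python
# Sizes measured in this exercise, in MB.
empty_db_mb = 4          # new copy containing no Usage Log documents
without_indexes_mb = 9   # fresh copy: documents present, indexes not yet built
with_indexes_mb = 74     # after opening the twelve views circled in red

docs = 6140
views = 12

docs_mb = without_indexes_mb - empty_db_mb       # space taken by documents
index_mb = with_indexes_mb - without_indexes_mb  # space taken by view indexes

kb_per_doc = docs_mb * 1024 / docs
index_kb_per_doc_per_view = index_mb * 1024 / docs / views

print(f"Documents: {docs_mb} MB, about {kb_per_doc:.2f} KB each")
print(f"Indexes:   {index_mb} MB, about {index_kb_per_doc_per_view:.2f} KB per document per view")
```

The index figure comes out a little under 1 KB per document per view, which is where the rounded “about 1 KB” comes from.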

Extrapolating this to tens of thousands of Usage Log documents will obviously lead to a much larger overall Repository size. Removing unused Usage Log views could therefore significantly reduce Repository size.
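As a rough illustration of that extrapolation, the Python sketch below scales the figures from this small test linearly. The function name and its default values are my own assumptions drawn from the test above (a 4 MB empty database, roughly 0.83 KB per document and 0.9 KB of index per document per built view). Real views differ in complexity, so treat the output as a ballpark only.

```python
def estimate_repository_mb(docs, built_views,
                           base_mb=4.0,
                           kb_per_doc=0.83,
                           index_kb_per_doc_per_view=0.9):
    """Ballpark Repository size in MB, assuming strictly linear growth.

    Only views whose indexes have actually been built are counted.
    """
    doc_mb = docs * kb_per_doc / 1024
    index_mb = docs * built_views * index_kb_per_doc_per_view / 1024
    return base_mb + doc_mb + index_mb


# Compare keeping all twelve heavily indexed views against keeping only six.
for views in (12, 6):
    size = estimate_repository_mb(60_000, views)
    print(f"60,000 documents, {views} views built: about {size:.0f} MB")
```

Halving the number of built views nearly halves the estimate, because the indexes dominate the total.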

Summary

This brief insight into view index creation should give you a more definitive basis for managing your NotesTracker usage log repository databases. The same general approach can be applied for managing the views in your own inventory of Lotus Notes/Domino applications.

I first learned about Notes in 1993, just into early retirement from IBM. Compared with the lumbering mainframe office systems architecture that IBM had spent a decade or more trying to get off the ground, I was (and still am) struck with the way that “plain vanilla” Lotus Notes and Domino do smart stuff such as replication with simplicity and elegance.

The basic underpinnings of the Notes/Domino document-oriented database architecture are still without peer, and there’s still a big role for it (compared with other platforms, which shall remain nameless, because Ed Brill and others in the Lotus community say quite enough to go around).

Let the battle rage on, competition is good for us all, keeping us all on our toes and leading to improvements all around. Crikey, it’s my 40th year in the IT industry, and I’m still enjoying it — I must be crazy!
